Wow! Automation! Hot Topic at last. Or not?

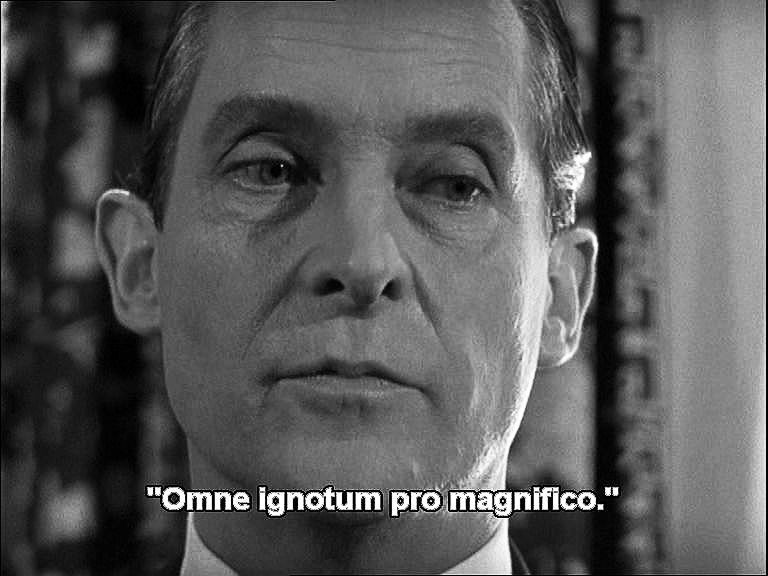

I’m a fan of Sherlock Holmes stories. A recurring plot line has Sherlock giving amazing insight about a person just by looking at them for a minute. In one such instance, Sherlock explains how he came to his conclusions, and on hearing this the person exclaims – “Oh! I thought there was some cleverness to it. It was so simple!!”. Offended by the remark, Sherlock replies – “Omne Ignotum Pro Magnifico (est)!”.

Watson quickly translates it to – “Everything becomes commonplace after explanation” which Sherlock doesn’t like and says it is a loose translation.

The above is a snippet from my article in 2012.

This Latin phrase by Tacitus means:

“Everything unknown appears magnificent”.

As testing professionals, which path should we choose: the path to look magnificent by hiding knowledge (the secrets of our skills), or the path to enable others to look beyond the magnificence and learn how to do it by breaking complexity into simple parts?

The problem is that magnificence sells. Explanations don’t.

Another related problem. Clarity sells. Ambiguity doesn’t.

Nevertheless, I’ll try to position an Explanation. I’ll try to position the Ambiguity.

You have been warned. This article is not about the magnificence of automation in testing. It’s about the raw details – the lumps, the nuggets.

Automation in Testing vs Test Automation

There is no difference. Automation in Testing is sort of a Think-Phrase for me. It’s a reminder for me that:

Automation is about using technology to execute an activity in testing with no human intervention. More often than not it solves the activity/problem only partially, just as humans solve problems partially (more on the human aspect here: Metacognition: Biases, Problems, Abstractions and Variables).

I carry two mutually contradicting thoughts in my mind: Nothing can be automated. Everything can be automated. I am pretty peaceful and happy with this contradiction.

The phrase Automation in Testing works better for me than test automation. If both these phrases mean the same to you, ignore. If any other phrase makes better sense, pick that. If this version of discussion does not make sense, well, you know what to do :-).

Let’s get started. I’ll share some examples, starting with something I didn’t do but which changed my mind about automation, luckily at a very early stage.

For this article, I will write less about what we usually relate to as automation. I’ll mention it. But won’t delve into it.

Some Real Examples from My Journey To Indicate Why I Say What I Say

1. Automated Audit of Database Test Design Spreadsheets

I was less than 1 year into testing. My first job. My second project.

It was a database migration project from IMS DB (hierarchical) to DB2 (RDBMS) for one of the largest insurance companies out there. The test cases essentially expressed, for a given scenario X, which new data entries would be created in which tables when the data was migrated. We did this test design before the DB2 version was in place, by going through logical diagrams and collaborating with developers. (Sounds like Agile? Well it was the year 2004. Agile was around then. But this company was a CMM Level 5 company. I hope you know what I mean :-)).

One big problem that we faced was a lot of mistakes in column names, missed values, the structure of the DB entries etc. It was a team of 50-60 testers at its peak (I remember looking after the work of 20+ engineers, which was strange as my designation was Trainee). Mistakes were prevalent. Reviews were costly.

One of the young engineers, who had only a few months of experience, found a solution. He spent a couple of days and wrote an Excel-based macro to do the structural audit of the test design spreadsheets. It highlighted in red the rows it didn’t understand, made the corrections which were obvious etc.
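To make the idea of a structural audit concrete, here is a minimal sketch in Python. It is not the original macro (that was written in Excel and I am not reproducing it here). The schema, the row keys (`table`, `column`, `expected_value`) and the function names are all assumptions made for illustration. It corrects the obvious (case and spacing in names) and flags what it does not understand, which is the equivalent of a row turning red.

```python
# Hypothetical schema: table name -> set of valid column names.
SCHEMA = {
    "POLICY": {"POLICY_ID", "HOLDER_ID", "START_DATE"},
    "CLAIM": {"CLAIM_ID", "POLICY_ID", "AMOUNT"},
}


def normalise(name):
    """Upper-case a name and replace spaces/hyphens with underscores."""
    return name.strip().upper().replace(" ", "_").replace("-", "_")


def audit_row(row, schema=SCHEMA):
    """Return (corrected_row, problems) for one test-design row.

    A non-empty problems list is the equivalent of a row highlighted in red.
    """
    problems = []
    table = normalise(row.get("table") or "")
    column = normalise(row.get("column") or "")
    value = (row.get("expected_value") or "").strip()

    corrected = dict(row, expected_value=value)
    if table not in schema:
        problems.append(f"unknown table: {row.get('table')!r}")
    else:
        corrected["table"] = table  # obvious correction: case/spacing only
        if column in schema[table]:
            corrected["column"] = column
        else:
            problems.append(f"unknown column for {table}: {row.get('column')!r}")
    if not value:
        problems.append("missing expected value")
    return corrected, problems


def audit(rows):
    """Audit all rows; return (corrected_rows, {row_number: problems})."""
    corrected_rows, flagged = [], {}
    for number, row in enumerate(rows, start=1):
        corrected, problems = audit_row(row)
        corrected_rows.append(corrected)
        if problems:
            flagged[number] = problems
    return corrected_rows, flagged
```

Rows could come from `csv.DictReader` over an exported sheet. Note the partial nature of the solution: it fixes only what is unambiguous and leaves the rest for a human reviewer.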

That’s the first time I saw automation.

Was it automation in testing?

2. Dynamic App GUI Test Automation

Fortunately this lasted for only a few months for me, before it could blind me and take me into the world of GUI-based test automation, which was pretty fashionable in those days – the days when WinRunner was becoming obsolete and being replaced by QTP.

My work was with neither of them. My work was with SilkTest. We could not use record-playback (thank God) and hence had to learn and implement dynamic identification of elements, as each user could have a completely different set of policies, options etc.

This very well fits the way automation is seen in testing.

3. Automation of Daily Status Report, Parsing & Plotting performance monitoring data

I switched to a performance testing role, and did various projects using WebLoad, JMeter, LoadRunner, OpenSTA. These fit the usual meaning of automation. So, no details here.

During this time, when I became a team lead, I was asked to send a daily status report. At times I had 12-14 people reporting to me. I loved technical work. This activity started taking 1.5 to 2 hours per day.

I wrote Excel macros (see the impact of that tester with a few months of experience?) to automate this and establish a process. The time was reduced to 5 minutes :-). I was the only lead sending timely reports. When my manager noticed this and asked me how, I showed the solution. It became a department-wide solution.

Is it automation in testing?

During the same time I also learned Perl and contributed to a performance test reporting system.

I remember a colleague and close friend who, because of the politics in the team, was stuck at a project location somewhere and asked me what he should do. He was supposed to parse the output of various server-side monitoring tools so that it could be analysed as graphs.

I wrote the parser for these 3-4 monitoring commands and plotting logic in Perl.
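The shape of such a parser is simple enough to sketch. The original was in Perl and handled specific monitoring commands which I am not naming here. The Python version below is only an illustration: the `vmstat`-style sample, the function names and the text-based plot are assumptions.

```python
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 1  0      0 801234  10240 204800    0    0     5     3  100  200  5  2 92  1  0
 3  0      0 790000  10240 204900    0    0     8    40  350  900 20  6 73  1  0
"""


def is_number(text):
    """True when the field parses as a float."""
    try:
        float(text)
        return True
    except ValueError:
        return False


def parse_columnar(text):
    """Parse whitespace-separated columnar output into a list of dicts."""
    header, samples = None, []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if all(is_number(field) for field in fields):
            # Keep a data row only when it lines up with the header.
            if header and len(fields) == len(header):
                samples.append(dict(zip(header, map(float, fields))))
        else:
            header = fields  # the most recent non-numeric line is the header
    return samples


def ascii_plot(values, width=40):
    """Render one bar of '#' per sample, scaled to the largest value.

    Expects a non-empty list of non-negative numbers.
    """
    peak = max(values) or 1
    return [f"{v:8.1f} | " + "#" * round(v / peak * width) for v in values]


if __name__ == "__main__":
    cpu_user = [sample["us"] for sample in parse_columnar(SAMPLE)]
    print("\n".join(ascii_plot(cpu_user)))
```

A real version would hand the parsed samples to a charting library. The point is that a few dozen lines turn raw tool output into something a human can analyse.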

Is it automation in testing?

4. Generation of Pseudo-malware Samples for Stress Testing

I switched to an anti-malware company after my performance testing role.

In one test situation, a change in encryption logic and its relation to performance was to be studied.

I wrote logic to automatically generate pseudo-/fake-malware and corresponding signatures to conduct the test, as well as to monitor the system to correlate resource metrics.

Is it automation in testing?

5. Automated Parsing of Exploratory Testing session reports

When I was introduced to SBTM by James Bach and Michael Bolton, I wanted to introduce it to my team. To do it in an organised way, I developed a parser-friendly session report format and automation to club the session reports from multiple individuals.
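What "parser-friendly" means can be shown with a small sketch. The format below is a simplified, hypothetical one (plain `KEY: value` lines, with `BUG` and `ISSUE` allowed to repeat), not the format I actually used, and the function names are made up for this example.

```python
LIST_KEYS = {"BUG", "ISSUE"}  # keys that may repeat within one report


def parse_report(text):
    """Parse one session report written as 'KEY: value' lines."""
    report = {"bugs": [], "issues": []}
    for line in text.splitlines():
        key, separator, value = line.partition(":")
        key, value = key.strip().upper(), value.strip()
        if not separator or not value:
            continue  # free-form notes are ignored by this sketch
        if key in LIST_KEYS:
            report[key.lower() + "s"].append(value)
        else:
            report[key.lower()] = value
    return report


def combine(reports):
    """Combine parsed reports from several testers into one summary."""
    summary = {"sessions": len(reports), "minutes": 0, "bugs": [], "issues": []}
    for report in reports:
        summary["minutes"] += int(report.get("duration", 0))
        for kind in ("bugs", "issues"):
            tester = report.get("tester", "?")
            summary[kind] += [(tester, item) for item in report[kind]]
    return summary


if __name__ == "__main__":
    first = parse_report(
        "TESTER: Asha\nCHARTER: Explore login errors\nDURATION: 90\n"
        "BUG: Password field accepts 500 characters\n"
    )
    second = parse_report(
        "TESTER: Ravi\nCHARTER: Explore report export\nDURATION: 60\n"
        "ISSUE: Test environment was down for 10 minutes\n"
    )
    print(combine([first, second]))
```

The human still does the exploring and the writing. Only the collation is delegated to technology.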

Is it automation in testing?

6. General Purpose Test Automation Frameworks

I developed two of them in succession. The second one was my parting work before I left employment to become an independent consultant.

This was sometime in the years 2010-2011.

Both were Python based. The second one was called TERMS. It had a management interface to execute tests on ESX server based virtual machines, supported black box and white box tests (by testers) written in Python/Perl, unit tests (by developers) in C using CPPUnit & CPPUnitLite, code coverage metrics separately for these using BullsEye, had a result comparison feature and so on.

Sounds fancy? Yes, but what you can note is that there is no fancy, shiny library here. It was all done using basic Python programming.

7. Automation of Data Corruption Testing/File Fuzzing

I have done some deep automation around binary data packing/unpacking and doing protocol-aware fuzzing from scratch in Python.

I’ll not delve into it here as the terms are not widely understood.

This is usually something not done at all by testers.
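For readers who have not met these terms, here is a toy sketch of what they mean. It is nowhere near the depth of the actual work. The record layout (a `DEMO` magic value, a version and a length field) is invented for illustration, and the only library used is Python's standard `struct` module.

```python
import random
import struct

# Hypothetical header: 4-byte magic, 2-byte version, 4-byte payload length.
HEADER = struct.Struct(">4sHI")  # big-endian, 10 bytes in total


def pack_record(payload, version=1, length=None):
    """Build a record; `length` can be overridden to lie about the size."""
    if length is None:
        length = len(payload)
    return HEADER.pack(b"DEMO", version, length) + payload


def unpack_record(data):
    """Parse a record, raising ValueError when it is structurally invalid."""
    if len(data) < HEADER.size:
        raise ValueError("truncated header")
    magic, version, length = HEADER.unpack_from(data)
    if magic != b"DEMO":
        raise ValueError("bad magic")
    payload = data[HEADER.size:]
    if length != len(payload):
        raise ValueError("length field does not match payload")
    return version, payload


def dumb_fuzz(data, rng, overwrites=3):
    """Format-blind fuzzing: overwrite a few randomly chosen bytes."""
    mutated = bytearray(data)
    for _ in range(overwrites):
        mutated[rng.randrange(len(mutated))] = rng.randrange(256)
    return bytes(mutated)


def aware_fuzz(payload):
    """Format-aware fuzzing: valid header, boundary values in the length field."""
    for length in (0, len(payload) - 1, len(payload) + 1, 0xFFFFFFFF):
        if 0 <= length <= 0xFFFFFFFF and length != len(payload):
            yield pack_record(payload, length=length)


if __name__ == "__main__":
    rng = random.Random(0)
    print(dumb_fuzz(pack_record(b"hello"), rng))
    for case in aware_fuzz(b"hello"):
        try:
            unpack_record(case)
        except ValueError as error:
            print("rejected:", error)
```

The format-blind version mostly gets rejected at the first check, such as the magic value. The format-aware version gets past the early checks and exercises the logic behind a specific field, which is why knowing the protocol matters.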

8. Automation of Code Generation and Code Conversion

This is something I did quite a few times once I got the hang of it.

When I had to position Framework 2 as superior to Framework 1 in the above story (although both were my work), I faced resistance. The reason? “It will take time for the tests to be converted. Team is busy.”

I wrote code to convert all tests from one framework to another.

Is it automation in testing?

There was a team later who wanted me and my team to generate scripts on top of the Test classes. Solution? I wrote a code generator.

Arjuna, my open source framework in Python, started out as a Java project and stayed that way for many years. When I decided to re-write it in Python, what did I do? I created a code converter from Java to Python. Since, with slight modifications, I was done with the Python version in a week or so, I was able to focus on building the advanced features which were the primary reason for this shift.

In another instance, I had identified more than 80% redundant code in a test automation framework implementation at a client’s site. Solution? Created a code converter from Java to Java.

Are these cases of automation in testing?

So, What Is Automation in Testing, Really?

My suggestion? Don’t think too much about putting it into a bucket.

Look at the problem. Can you break the problem into pieces – pieces which can be delegated to technology and pieces which are a better fit for a human? Now, think about automating the former. Don’t expect perfection. The solution will be imperfect just like human testing is imperfect. That does not mean we should not do it.

The problem I see is when we start using terms like “end-to-end”, “one-click” and so on. This self-imposed unrealistic expectation will do only one of two things. Those who care about testing will stay away from automation. Those who don’t care about testing will keep making fools of others.

When you think automation in testing, think Partial Automation.

This one is for the good, sane voices: Please carefully assess your messages. Are you passing a subtle message against automation/technology? Are you passing a subtle message against human role in testing? I am not talking about a single post or an article. I am talking about the totality of it in the public eye.

I am not sure whether I am making my point clear. That’s ok. But the following contradiction somehow in its exact form gives clarity to me:

Nothing can be automated. Everything can be automated.

The first message tells me to be skeptical. It tells me not to have a “replace mentality”. When you automate, you’ll end up missing pieces. Unless those pieces are taken care of by humans, you are not really improving anything; you are in fact reducing what you were doing earlier.

The second message tells me to find opportunities. It also tells me automation can at times do what humans cannot. There are many things which humans are not capable of. Automation by its nature can do them. No, I am not talking of reduction in time. I am talking of capability. Humans are NOT capable of doing certain things in testing. So, unless you are doing automation, you are missing out on things.

The Thin Line

Beyond the differentiation between what humans do and what automation does, if we are too particular about what is what, or worse yet what is to be called what, we will miss important opportunities. Here’s an example:

When you are reading code to find issues, it is called Review. When you use a tool, it’s called Static Analysis. That’s what you will be told. Can’t you use tools/small scripts/regular expressions in your editor to partially automate stuff during the review? Shouldn’t you subject the output of the static analysis to a human evaluation? So, think again. What were the terms telling you? And what are these word-level debates really doing for you?
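As a small illustration of partially automating a review, the sketch below uses regular expressions to point a human reviewer at lines worth a second look. The patterns and names are assumptions for this example. They are rough heuristics, not a static analyser, and they will produce both false alarms and misses.

```python
import re

# Hypothetical review heuristics; tune or replace these for your codebase.
PATTERNS = {
    "bare except": re.compile(r"^\s*except\s*:"),
    "leftover debug print": re.compile(r"^\s*print\("),
    "TODO/FIXME marker": re.compile(r"#.*\b(TODO|FIXME)\b"),
    "hard-coded sleep": re.compile(r"\bsleep\(\s*\d"),
}

SNIPPET = '''\
def wait_for_page(driver):
    try:
        driver.load()
    except:
        time.sleep(5)  # TODO: replace with an explicit wait
'''


def review_hints(source):
    """Return (line_number, label, text) for lines a reviewer should look at."""
    hints = []
    for number, line in enumerate(source.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                hints.append((number, label, line.strip()))
    return hints


if __name__ == "__main__":
    for number, label, text in review_hints(SNIPPET):
        print(f"line {number}: {label}: {text}")
```

The script only says where to look. Whether a flagged line is really a problem remains a human judgement, so is this a Review or Static Analysis?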

When I write articles of this nature, I write at the risk of inviting wrath from people who are more passionate about either of these paths.

To me there are no absolute sides to take. I serve Testing as my profession.

That’s all for now.
