I’ve created a Custom GPT called “Linguistik”. It is public, so anyone with a paid ChatGPT account (GPT-4) can access it.

LLMs are supposed to understand natural(-ish) language. So I decided to run an experiment: create a custom GPT that uses linguistics as the basis for generating questions from vague requirements. Doing it without a proper NLP component is limiting, but let’s see how it fares.

You can access it here with a ChatGPT account:

Usage/What to Expect

———————-

1. Say Hello (I would love to hear about the variants you try :-)) or click the “Let’s Get Started!” suggestion box.

2. Follow the instruction in its response and give a few sentences about a vague requirement (synthetic or abstracted from your work). It can be a single sentence.

3. It will ask about you: not personal data, but what you want to do. Your answer configures its behaviour indirectly. It controls the complexity of the questions, how creative it gets, the focus areas, etc.

4. It will start generating questions 15-20 at a time, then pause and ask you what to do next. You can ask it to generate more and it should oblige. The nature of these questions is governed by what you wrote in step 3. You can change this after each response (e.g. Complexity = Low/Medium/High, Focus = Security, Creativity = Low/Medium/High, etc.)

5. The “download” command consolidates all questions into CSV format (in progress).
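To make step 5 concrete, here is a minimal sketch of what consolidating question batches into CSV could look like. It is not how Linguistik does it internally: the function name `questions_to_csv`, the column names, and the sample questions are all made up for illustration.

```python
import csv
import io


def questions_to_csv(batches):
    """Flatten batches of questions into one CSV string.

    `batches` is a list of lists of question strings, one inner list per
    response. The column layout is an assumption made for this sketch.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["number", "batch", "question"])
    number = 0
    for batch_index, batch in enumerate(batches, start=1):
        for question in batch:
            number += 1
            # csv.writer quotes questions that contain commas or quotes.
            writer.writerow([number, batch_index, question])
    return buffer.getvalue()


# Hypothetical questions for the requirement
# "By clicking this button, a user can open files."
sample_batches = [
    ["Which file types can the user open?",
     "If the file is locked, corrupt, or missing, what happens?"],
    ["Can more than one file be opened with a single click?"],
]
csv_text = questions_to_csv(sample_batches)
```

Keeping the batch number next to each question lets you see later which settings were in effect when it was generated, provided you note the settings per batch.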

Some Internal Details

Apart from the above, you can try other tweaks that this GPT has not been explicitly instructed about. Here are some further internal details:

1. It takes a vague requirement and does a high-level linguistic analysis of it, along with creating a question table based on multi-clause statements.

2. It does a high-level contextual analysis based on your requirement and the way you describe yourself.

3. Based on the above it creates 7 internal tables. As expected from an LLM, these tables are not deterministic, but there is some level of repeatability that Linguistik is able to achieve. All these tables are internal to the GPT so that you can focus on ideas rather than the technique.

4. The 4 auto-determined parameters can also be controlled explicitly and independently (e.g. Complexity = <value>):

– Complexity: Governs the structural complexity of the question (Low, Medium, High, Highest)

– Creativity: Governs the nature of questions from the obvious ones to the corner cases (Low, Medium, High, Highest)

– Role: Who you are. The default is (ahem …) Tester. But you can mention any role.

– Quality Attributes: Default is ALL. You can carefully control it. E.g. in a recent demo I was sitting with a DevOps Engineer whose requirement was about the organisation’s adoption of dependency resolution measures. So, we tried “Adoptability” as the quality attribute and DevOps Engineer as the role. The Complexity attribute also has an indirect relationship here.
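As a rough mental model of these four parameters, the sketch below parses `Name = value` overrides from a message into a settings object. It is purely illustrative: Linguistik is driven by prompt instructions, not by this code. The `Settings` class, the `apply_overrides` function, the use of `None` for "auto-determined", the "/" separator for several quality attributes, and treating `Focus` as an alias for quality attributes are all assumptions.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

LEVELS = ("Low", "Medium", "High", "Highest")

# Matches "Name = value" pairs separated by commas, semicolons, or newlines.
OVERRIDE = re.compile(
    r"\b(Complexity|Creativity|Role|Focus|Quality Attributes)\s*=\s*([^,;\n]+)",
    re.IGNORECASE,
)


@dataclass
class Settings:
    complexity: Optional[str] = None   # None means auto-determined
    creativity: Optional[str] = None   # None means auto-determined
    role: str = "Tester"               # default role named in the post
    quality_attributes: List[str] = field(default_factory=lambda: ["ALL"])


def apply_overrides(settings: Settings, message: str) -> Settings:
    """Apply every 'Name = value' override found in the message."""
    for key, raw in OVERRIDE.findall(message):
        key, value = key.lower(), raw.strip()
        if key in ("complexity", "creativity"):
            level = value.capitalize()
            if level not in LEVELS:
                raise ValueError(f"{key} must be one of {LEVELS}, got {value!r}")
            setattr(settings, key, level)
        elif key == "role":
            settings.role = value
        else:
            # "Focus" is treated as an alias for quality attributes (assumption).
            settings.quality_attributes = [v.strip() for v in value.split("/")]
    return settings


demo = apply_overrides(
    Settings(),
    "Complexity = Highest, Creativity = high, "
    "Role = DevOps Engineer, Quality Attributes = Adoptability",
)
```

Each parameter is parsed independently, which mirrors the point that you can set all of them or any combination and leave the rest auto-determined.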

If you are new to testing, it is fine to just say “skip” when Linguistik asks its personality question.

But if you are serious, try setting Complexity = Highest and Creativity = Highest, describe your role and focus properly, and mention the exact quality perspective(s) you are interested in. You can do all of these or any combination. It’s a non-deterministic technology, but from a controllability perspective, you have hundreds of combinations with which to steer it.

LLMs have often been blamed for not being curious, not being interactive, or not doing contextual interpretation.

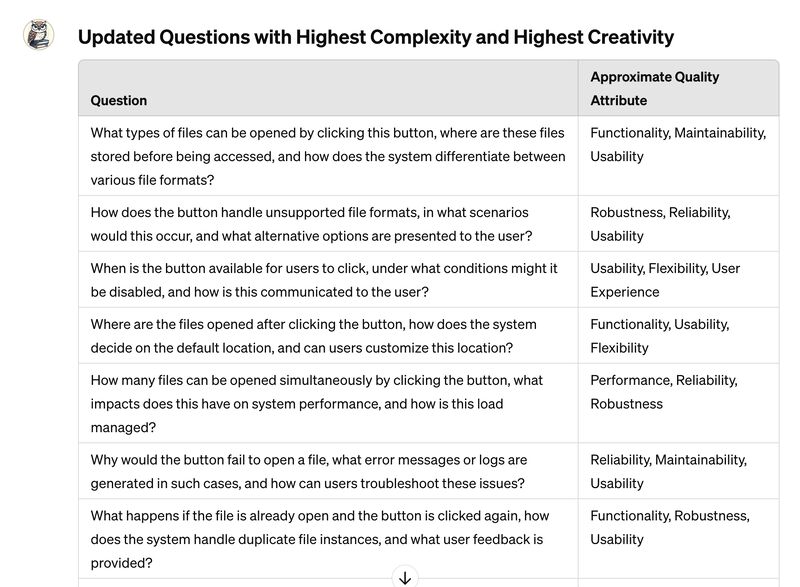

I gave Linguistik the following vague requirement and switched complexity and creativity to highest: “By clicking this button, a user can open files.” The attached image contains some of the first questions it generated. (The default Role is Tester, and all quality attributes are considered.)

Try Linguistik and see how such curiosity, interactivity, and contextual interpretation can be simulated. Let’s forget the argument about LLMs being sentient, intelligent, etc., or the other way round. These are maxims. I don’t think they are, but that doesn’t take away the fact that they can be useful, if we want them to be.

Make up your mind. Do you want to test LLMs or do you want to use LLMs for testing? There are some overlaps, of course. But if you mix these two goals too much, you will do neither effectively. We need to move from hype and blatant criticism towards critique – that’s what testing is about (Hint: If as a tester you appreciate a technology or a feature, you are still a tester, and that’s a part of the testing thought process as well).

What I’d Like to Hear

———————

– Try out at least 2 problems: One in your comfort zone and one that you have absolutely no idea about. Come up with a list of questions yourself so that you can compare to what extent this GPT is really useful. I suspect and expect this experience to be mixed.

– Overall experience and suggestions. You can DM me, or write in comments – whatever works for you.

It’s at a very early stage – 2 days’ worth of work. So, if you are interested, please explore it and share your findings. While you do this, keep in mind that it’s still an LLM, customised or not, and it has its limitations. So, take charge.

PS: It’s a tool to elaborate on vague requirements and generate test ideas. It’s an open-ended tool, so please approach it with an open mind. Your goal is not to find which question is least interesting. Your goal is to find whether some questions were helpful and to what extent. Of course, if it is of very little help for testers at different levels of knowledge, then this is a failed experiment 😊.
